October 4, 2024 | 11 min read

NVIDIA's MaskedMimic: Revolutionizing Physics-Based Character Control

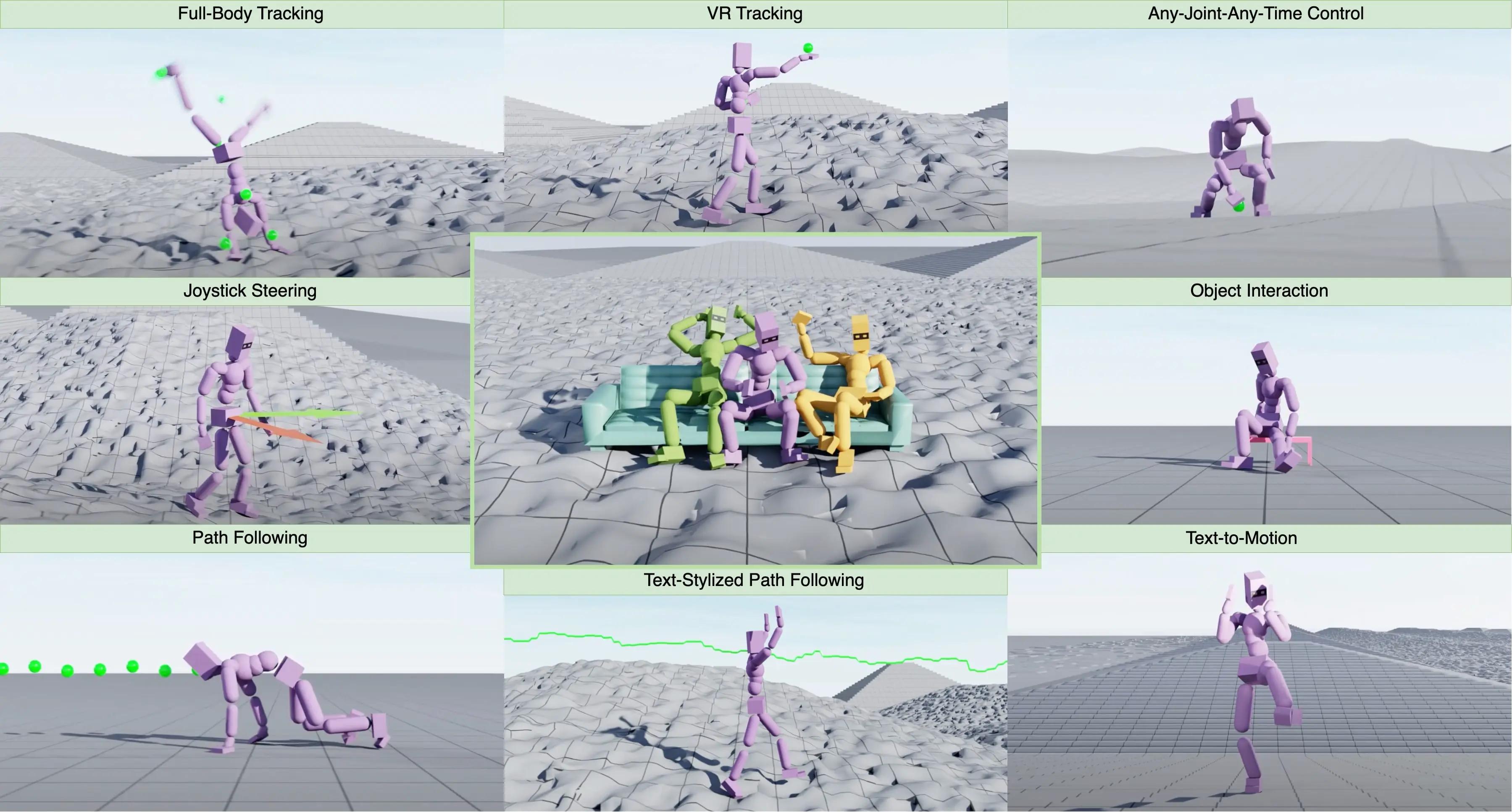

NVIDIA's recent research introduces MaskedMimic, a unified controller for physics-based character animation. It works across diverse terrains and control modalities, generating complex character motions in real time from partial motion data, text instructions, and environmental constraints.

The system marks a significant step toward more interactive, adaptable, and lifelike virtual characters. Unifying disparate control mechanisms under one model matters for industries that rely on character animation, such as gaming, virtual reality (VR), and film production. MaskedMimic was developed by Chen Tessler, Yunrong Guo, Ofir Nabati, Gal Chechik, and Xue Bin Peng, and reflects NVIDIA's continued work in artificial intelligence (AI) and motion simulation.

What is MaskedMimic?

MaskedMimic is a physics-based controller that synthesizes coherent and realistic animations by "inpainting" motion sequences from incomplete data. Whether the input comes from keyframes, partial motion capture data, or even simple text commands, the system fills in the gaps to create smooth and plausible character motions.
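To make the "inpainting" idea concrete, here is a minimal sketch of how a full motion clip could be reduced to a partial one by hiding joints and frames. It is an illustration only, not NVIDIA's implementation: the function names, joint names, and the use of `None` for hidden entries are all assumptions made for this example.

```python
from typing import Dict, List, Optional, Set, Tuple

Vec3 = Tuple[float, float, float]
Frame = Dict[str, Vec3]                  # joint name -> target position
MaskedFrame = Dict[str, Optional[Vec3]]  # None marks a hidden entry


def mask_motion(frames: List[Frame],
                visible_joints: Set[str],
                visible_frames: Set[int]) -> List[MaskedFrame]:
    """Hypothetical helper: keep a target only if both its joint and its frame are visible."""
    masked: List[MaskedFrame] = []
    for t, frame in enumerate(frames):
        keep_frame = t in visible_frames
        masked.append({
            joint: pos if keep_frame and joint in visible_joints else None
            for joint, pos in frame.items()
        })
    return masked


def observed_fraction(masked: List[MaskedFrame]) -> float:
    """Share of (frame, joint) entries that are still visible after masking."""
    total = sum(len(f) for f in masked)
    seen = sum(1 for f in masked for p in f.values() if p is not None)
    return seen / total if total else 0.0


# Toy clip: 4 frames, 3 joints, positions in metres (made-up values).
clip = [{"head": (0.0, 0.0, 1.7),
         "left_hand": (0.3, 0.0, 1.0),
         "pelvis": (0.0, 0.0, 1.0)} for _ in range(4)]

# Keyframe-style input: only the head and left hand, only on the first and last frame.
keyframes = mask_motion(clip, visible_joints={"head", "left_hand"}, visible_frames={0, 3})
```

In this picture, the controller's job is to produce a complete, physically plausible motion that agrees with whatever entries remain visible.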

How MaskedMimic Simplifies Animation

This system simplifies the process of creating character animations, avoiding the need for complex reward engineering—typically a tedious part of controller development. It combines various forms of inputs, such as sparse joint positions, object interactions, or text-based commands, and produces seamless animations that respond fluidly to both the environment and user-defined goals.

Motion Tracking and Full-Body Control

One of MaskedMimic’s most notable applications is its motion tracking capability. The system can generate full-body animations using partial motion data, reconstructing a character’s entire movement from incomplete joint positions or VR headset inputs.

Full-Body Motion from Sparse Inputs

This functionality is pivotal in various applications, from gaming and VR experiences to movie production, where realistic, full-body motion is essential. In VR, for instance, only limited data from hand controllers and a headset may be available. MaskedMimic uses this sparse input to generate full-body motions that enhance immersion and realism in virtual environments.

Scene Retargeting and Adaptability

Additionally, MaskedMimic excels in scene retargeting, where the goal is to reproduce a character’s motion on different terrain. The system takes motions originally captured on flat surfaces and adapts them to irregular or complex environments while staying close to the original movement.

Sparse Tracking and Intelligent Motion Reconstruction

Sparse motion tracking allows MaskedMimic to create a character’s full-body motion using only a few joint positions or sparse sensor data, making it highly efficient and adaptable. Imagine a scenario where only head and hand positions are provided—typical in many VR setups. MaskedMimic can interpret this minimal data and generate realistic motions such as running or performing a cartwheel, seamlessly filling in the missing details with physically plausible movements.
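One simple way to check how closely a generated motion follows sparse inputs is to average the position error over the tracked joints only. The sketch below assumes a head-and-hands setup; the function name, joint names, and sample values are hypothetical, and the metric is a generic one rather than anything taken from the MaskedMimic paper.

```python
import math
from typing import Dict, Iterable, List, Tuple

Vec3 = Tuple[float, float, float]
Pose = Dict[str, Vec3]  # joint name -> position in metres


def sparse_tracking_error(generated: List[Pose],
                          targets: List[Pose],
                          tracked: Iterable[str]) -> float:
    """Mean Euclidean distance between generated and target positions,
    computed over the tracked joints only."""
    tracked = list(tracked)
    if len(generated) != len(targets):
        raise ValueError("generated and targets must have the same number of frames")
    total, count = 0.0, 0
    for gen, tgt in zip(generated, targets):
        for joint in tracked:
            total += math.dist(gen[joint], tgt[joint])
            count += 1
    return total / count if count else 0.0


# One frame of VR-style targets (made-up values): headset plus two controllers.
targets = [{"head": (0.0, 0.0, 1.70),
            "left_hand": (-0.3, 0.2, 1.0),
            "right_hand": (0.3, 0.2, 1.0)}]

# A generated full-body pose; the untracked pelvis is ignored by the metric.
generated = [{"head": (0.0, 0.0, 1.73),
              "left_hand": (-0.3, 0.2, 1.0),
              "right_hand": (0.3, 0.2, 1.0),
              "pelvis": (0.0, 0.0, 0.95)}]

err = sparse_tracking_error(generated, targets, tracked=["head", "left_hand", "right_hand"])
```

Here the head is 3 cm off and both hands match, so `err` comes out at roughly 0.01 m. Joints that were never tracked do not affect the score, which mirrors the idea that the system is free to fill them in.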

Advantages of Sparse Motion Tracking

The efficiency of sparse tracking significantly reduces the hardware requirements for realistic character motion generation, allowing developers to produce high-quality animations without the need for expensive, high-fidelity motion capture systems.

Goal Engineering: User-Customized Motion Generation

MaskedMimic introduces the concept of "goal engineering," where users can define logical constraints to direct a character's behavior. For instance, by setting a goal for a character to move to a specific point, reach an object, or interact with the environment in a particular way, the system can autonomously generate the appropriate animation to achieve that goal.
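As a rough illustration of goal engineering, the sketch below turns a "reach this object" goal into per-joint constraints using simple logic: walk toward the object while it is out of reach, then constrain the hand once it is close. The `ReachGoal` class, the `constraints_for` function, the joint names, and the 0.8 m reach radius are all assumptions for this example, not part of any published API.

```python
import math
from dataclasses import dataclass
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class ReachGoal:
    object_position: Vec3
    reach_radius: float = 0.8  # metres; assumed arm reach for this sketch


def constraints_for(root_position: Vec3, goal: ReachGoal) -> Dict[str, Vec3]:
    """Pick which joint to constrain based on horizontal distance to the object."""
    dx = goal.object_position[0] - root_position[0]
    dy = goal.object_position[1] - root_position[1]
    if math.hypot(dx, dy) > goal.reach_radius:
        # Too far away: steer the root toward the object, keeping its current height.
        return {"root": (goal.object_position[0], goal.object_position[1], root_position[2])}
    # Within reach: ask the right hand to touch the object.
    return {"right_hand": goal.object_position}


goal = ReachGoal(object_position=(3.0, 0.0, 1.0))
far = constraints_for((0.0, 0.0, 0.9), goal)   # constrains "root"
near = constraints_for((2.5, 0.0, 0.9), goal)  # constrains "right_hand"
```

The point of the sketch is that the user writes goals and conditions rather than reward functions; generating the motion that satisfies each constraint is left to the controller.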

Flexibility in User-Defined Constraints

This flexibility is especially beneficial in interactive applications like video games or simulations, where character behaviors need to dynamically respond to changing user inputs or environmental factors. The system enables characters to adapt on the fly, performing complex tasks such as locomotion, object interaction, or even precise hand movements.

Locomotion: Realistic Movement Across Complex Terrains

One of MaskedMimic’s standout features is its ability to handle locomotion across diverse and uneven terrains. By constraining key points like the head’s position and orientation, the system generates natural walking, running, or even crawling motions that adapt seamlessly to various surfaces.
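The head constraint described above can be sketched as a short sequence of future head targets along a desired direction of travel. This is a simplified illustration under stated assumptions: the function name, its parameters, the `(x, y, z, yaw)` tuple layout, and the `ground_height` callback are hypothetical and not taken from NVIDIA's code.

```python
import math
from typing import Callable, List, Tuple

HeadTarget = Tuple[float, float, float, float]  # x, y, z in metres, yaw in radians


def head_path_targets(start_xy: Tuple[float, float],
                      heading_deg: float,
                      speed: float,
                      head_height: float,
                      horizon_s: float,
                      dt: float,
                      ground_height: Callable[[float, float], float] = lambda x, y: 0.0
                      ) -> List[HeadTarget]:
    """Future head positions and facing direction along a straight path.

    head_height is measured above the ground, so the same request can be
    reused on uneven terrain by supplying a different ground_height function.
    """
    heading = math.radians(heading_deg)
    steps = int(round(horizon_s / dt))
    targets: List[HeadTarget] = []
    for k in range(1, steps + 1):
        t = k * dt
        x = start_xy[0] + speed * t * math.cos(heading)
        y = start_xy[1] + speed * t * math.sin(heading)
        targets.append((x, y, ground_height(x, y) + head_height, heading))
    return targets


# Running on flat ground: 1.5 m/s along +x, head 1.6 m above the ground.
run = head_path_targets((0.0, 0.0), 0.0, 1.5, 1.6, horizon_s=1.0, dt=0.5)

# Same request on a gentle slope that rises 10 cm per metre travelled in x.
uphill = head_path_targets((0.0, 0.0), 0.0, 1.5, 1.6, horizon_s=1.0, dt=0.5,
                           ground_height=lambda x, y: 0.1 * x)
```

Lowering `head_height` (for example to around 0.4 m) would request a crawl-like posture instead of a walk or run, with the rest of the body left for the controller to resolve.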

Terrain-Adaptive Character Motion

This capability is particularly important for creating immersive virtual worlds where characters must navigate unpredictable or challenging environments. MaskedMimic reduces the need for extensive manual tweaking or environment-specific animation sets, allowing characters to move naturally across a wide range of terrains.

Text-Based Control: Commanding Movements Through Language

MaskedMimic introduces a novel approach to character control through text commands, providing an intuitive way to generate animations without needing detailed joint data. For example, simple text inputs like "raise his hands and spin in place" or "play the violin" prompt the system to generate the corresponding motion in real time.

Natural Language Commands for Realistic Motion

This text-based control method opens up exciting possibilities for user interaction, particularly in VR and gaming environments where players can control characters through speech or text inputs. The ability to interpret and respond to natural language commands adds a new layer of immersion and accessibility to virtual worlds.

Object Interaction: Adapting to Physical Properties of Objects

MaskedMimic can also generate realistic object interaction motions. The system learns to handle objects in the scene based on their physical properties, such as weight, size, and position, and generates motions that align with these characteristics.

Generalization to Complex Scenes

For instance, when tasked with picking up or interacting with an object, MaskedMimic ensures that the character's movements reflect the object's properties, providing a more authentic and believable animation. Even more impressively, this capability generalizes across different terrains, allowing characters to interact with objects even in environments they were not specifically trained on.

Real-World Applications of MaskedMimic

The versatility of MaskedMimic makes it applicable across numerous industries. In gaming, for instance, the system allows for more responsive, lifelike characters that can adapt to player inputs in real time. Players can experience smoother, more natural movements and interactions, heightening immersion and engagement.

Applications in Virtual Reality, Film, and Robotics

In VR environments, MaskedMimic enables more realistic body tracking, even with limited hardware, enhancing the user’s sense of presence and interaction within virtual worlds. Meanwhile, film production can benefit from faster, more cost-effective character animation without the need for extensive motion capture equipment.

Moreover, the system's ability to handle a wide range of inputs and tasks makes it a valuable tool for robotics and simulation, where realistic human-like or animal-like behaviors are necessary. The adaptability and efficiency of MaskedMimic in generating complex motions from sparse data could significantly reduce the resources and time required for training and developing robotic systems.

FAQs

What is MaskedMimic, and how does it work?

MaskedMimic is a physics-based controller developed by NVIDIA that generates coherent character animations from partial motion data, text commands, or object interactions. It works by "inpainting" missing motion data to create seamless, realistic character movements.

How does MaskedMimic handle motion tracking in VR?

MaskedMimic can infer full-body motions from limited VR inputs, such as data from hand controllers and headsets. By analyzing sparse sensor data, it generates lifelike body movements that enhance immersion in virtual environments.

What is goal engineering in MaskedMimic?

Goal engineering allows users to define specific goals or constraints for a character’s motion. For example, users can set a target location or object interaction, and the system will autonomously generate the necessary animation to achieve the goal.

Can MaskedMimic generate movements from text commands?

Yes, MaskedMimic can generate animations based on simple text inputs, such as "jump" or "spin around." This feature enables intuitive control of characters in virtual environments and games.

How does MaskedMimic adapt to different terrains?

MaskedMimic can generalize motions learned from flat terrains to irregular or complex environments. It adjusts a character's movements to fit the terrain, allowing for seamless locomotion across diverse surfaces.

What industries could benefit from MaskedMimic’s technology?

MaskedMimic is valuable for gaming, VR, film production, and robotics. Its ability to generate realistic, adaptive animations from sparse data makes it applicable to any field requiring lifelike character or robotic movements.

Conclusion

NVIDIA’s MaskedMimic sets a new standard in the world of physics-based character control. By providing a single, unified system capable of handling diverse control modalities—from motion tracking to text commands and object interactions—MaskedMimic allows for the creation of more lifelike and immersive characters than ever before. Its potential impact stretches across gaming, VR, film, and beyond, heralding a future where virtual characters can seamlessly adapt to complex environments and respond fluidly to user inputs.
